Why Is an Activation Function Needed in Neural Networks?

Today, allow me to share with you the relationship between a spider and an activation function.

An activation function is used in a neural network to introduce non-linearity. Without a proper activation function, a neural network is simply a large linear model.

Consider the case where you have data that is linearly separable.


Constructing a model to separate the yellow and orange data points is very simple. A simple logistic regression will do this job well.

Now consider the case where your data set looks like the one shown below.

What do you think now? Will a simple linear model be able to carve out a hyperplane that separates the yellow points from the orange ones?

I don’t think so. In fact, a simple linear model may not be able to find complex patterns in the data, because its decision boundary is always a straight line (or a flat hyperplane in higher dimensions).

So now we turn to the big brother of the linear model: the neural network.

A neural network has the super ability to capture both linear and nonlinear dependencies, depending on the dataset.

The way I see it, an activation function is a special kind of function that gives superpowers to a simple linear model, helping the model capture complex, nonlinear patterns.

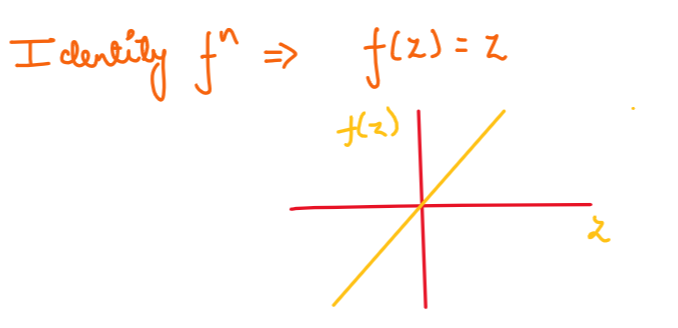
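To make the "superpower" concrete, here is a small sketch in NumPy. Everything in it is an illustrative assumption rather than something from the data sets above: the four XOR-style points, the hand-picked (not trained) weights `W1`, `b1`, `w2`, and the helper name `forward`. It runs the same tiny network twice, once with ReLU and once with the identity function.

```python
import numpy as np

def relu(z):
    # ReLU: keep positive values, set negative values to zero
    return np.maximum(0.0, z)

# Four XOR-style points (assumed toy data): the points (0,1) and (1,0)
# belong to one class, (0,0) and (1,1) to the other. No straight line
# separates the two classes.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)

# Hand-picked weights for a 2-unit hidden layer (assumed, not learned)
W1 = np.array([[1.0, 1.0], [1.0, 1.0]])
b1 = np.array([0.0, -1.0])
w2 = np.array([1.0, -2.0])

def forward(X, activation):
    # Hidden layer followed by a linear output unit
    hidden = activation(X @ W1 + b1)
    return hidden @ w2

with_relu = forward(X, relu)
with_identity = forward(X, lambda z: z)

print(with_relu)      # values 0, 1, 1, 0: the XOR pattern
print(with_identity)  # values 2, 1, 1, 0: just 2 - (x1 + x2), a linear function
```

With ReLU, the two classes get different outputs (1 versus 0). With the identity function, the same weights only produce a linear function of the inputs, so the two classes cannot be split by a single threshold.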

As discussed earlier, a neural network with no activation function, or with only the identity activation function, is just a large linear model.
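A quick way to convince yourself of this is to stack two layers without any activation and check that they collapse into one. The sketch below uses NumPy with randomly generated weights; the layer sizes and the names `W_combined` and `b_combined` are assumptions chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two layers with identity activation: y = W2 @ (W1 @ x + b1) + b2
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=2)

x = rng.normal(size=3)
two_layer_output = W2 @ (W1 @ x + b1) + b2

# The same mapping written as a single linear layer
W_combined = W2 @ W1
b_combined = W2 @ b1 + b2
one_layer_output = W_combined @ x + b_combined

print(np.allclose(two_layer_output, one_layer_output))  # True
```

The same argument applies to any number of layers: multiplying the weight matrices together always leaves a single weight matrix and a single bias, so extra depth adds nothing unless a nonlinear activation sits between the layers.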

Identity Activation Function

Some well-known activation functions used in neural networks are:

1. Sigmoid Function

2. Rectified Linear Unit (ReLU)

3. Leaky ReLU
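For reference, the three functions listed above can be sketched in NumPy as follows. The function names and the Leaky ReLU slope `alpha=0.01` are assumptions (0.01 is a commonly used value, but it is a tunable choice, not a fixed part of the definition).

```python
import numpy as np

def sigmoid(z):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    # Passes positive values through, sets negative values to zero
    return np.maximum(0.0, z)

def leaky_relu(z, alpha=0.01):
    # Like ReLU, but negative values are scaled by a small slope
    # (alpha is an assumed default) instead of being zeroed out
    return np.where(z > 0, z, alpha * z)

z = np.array([-2.0, 0.0, 2.0])
print(sigmoid(z))     # roughly 0.119, 0.5, 0.881
print(relu(z))        # values 0, 0, 2
print(leaky_relu(z))  # values -0.02, 0, 2
```

All three are nonlinear, which is exactly what lets a stack of layers represent more than a single linear model can.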
